Nine years ago, a well-known pharmacologist hosted a researcher from another university in his lab. On a Saturday night last September, he learned while surfing Google Scholar that they had published a paper together.

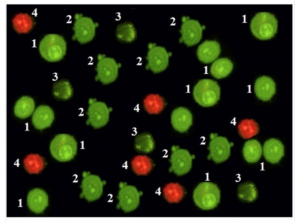

Marco Cosentino, who works at the University of Insubria in Italy, knew that Seema Rai, a zoologist at Guru Ghasidas Vishwavidyalaya in India, had collected data during her six months in his lab, but he had warned her that they were too preliminary to publish. She published the data, which concern melatonin's role in immunity, anyway: the paper appeared last summer in the Journal of Clinical & Cellular Immunology, listing Cosentino as the second author.

The day after he discovered the paper, Cosentino sent an email to the editor in chief of the journal, Charles Malemud, explaining why he did not approve of the publication:

Continue reading Unwitting co-author requests retraction of melatonin paper