Can playing first-person shooter video games train players to become better marksmen?

A 2012 paper titled “Boom, Headshot!” presented evidence suggesting that it can. But after enduring heavy fire from critics (one of whom has long argued that video games have little lasting impact on users), the authors are planning to retract the paper, citing irregularities in the data. Although the journal has apparently agreed to publish a revised version of the paper, the researchers’ institution launched a misconduct investigation last year into one of the two co-authors.

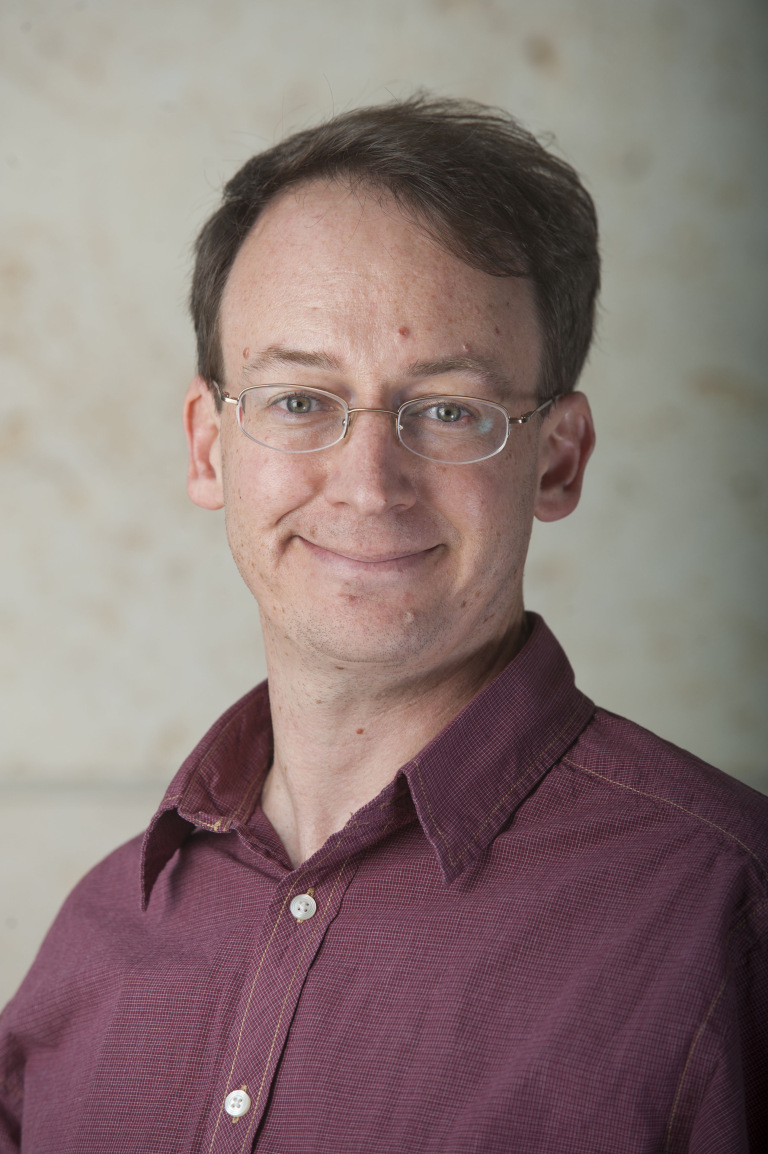

Brad Bushman, a professor of communication and psychology at the Ohio State University, headed the research along with then-postdoc Jodi Whitaker, now an assistant professor at the University of Arizona.

According to a recent email from the editor of Communication Research to two critics of the paper, the retraction notice will look something like this:

The BMJ has released a