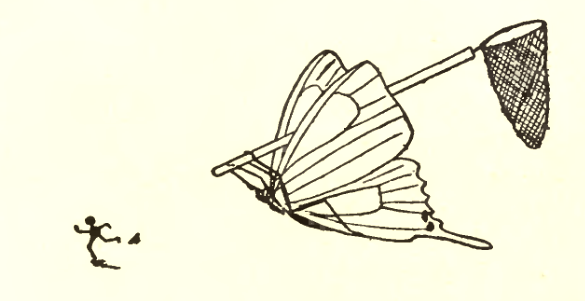

Talk about missing the trees for the, ahem, forest plots. A researcher is accusing an Elsevier journal of refusing to retract a study that depends in large part on a flawed reference.

The paper, “The effect of Acupressure and Reiki application on Patient’s pain and comfort level after laparoscopic cholecystectomy: A randomized controlled trial,” appeared in early April in Complementary Therapies in Clinical Practice and was written by a pair of authors from universities in Turkey.

The article caught the attention of José María Morán García, of the Nursing and Occupational Therapy College at the University of Extremadura in Caceres, Spain. Morán noticed that what he considered a critical underpinning of the paper was a 2018 meta-analysis (also by authors from Turkey) with a major flaw: According to Morán and a group of his colleagues, the meta-analysis, also published in Complementary Therapies in Clinical Practice, showed the opposite of what its authors stated. Indeed, they’d made that case to the journal back in 2018, when the meta-analysis first appeared, in a paper titled “Misinterpretation of the results from meta-analysis about the effects of reiki on pain.”

Continue reading Oh, the gall(stones): A journal should retract a paper on reiki and pain, says a critic

Can seeing a weapon increase aggressive thoughts and behaviors?

A cardiology journal has retracted a 2016 meta-analysis after the editors had an, ahem, change of heart about the rigor of the study.

Reuters has removed a story about gender confirmation surgery, saying it included problematic data.