Before we present this week’s Weekend Reads, a question: Do you enjoy our weekly roundup? If so, we could really use your help. Would you consider a tax-deductible donation to support Weekend Reads and our daily work? Thanks in advance.

Sending thoughts to our readers and wishing them the best in this uncertain time.

The week at Retraction Watch featured:

- More questions about a paper on hydroxychloroquine and COVID-19.

- More than 40 retractions or expressions of concern for papers that likely reported on organ transplants from executed prisoners in China.

- The tale of the secret publishing ban.

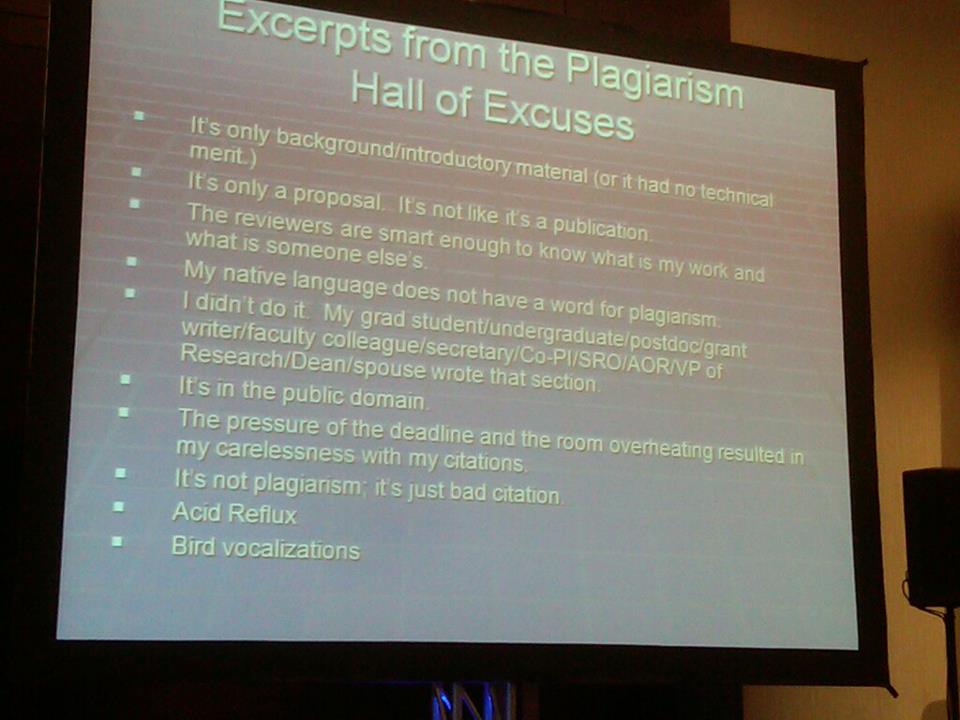

- A paper that plagiarized a paper that had itself plagiarized, but isn’t being retracted.

Here’s what was happening elsewhere:

Continue reading Weekend reads: The effects of coronavirus on the literature; a sting involving Big Bird; a made-up name appears in a medical journal