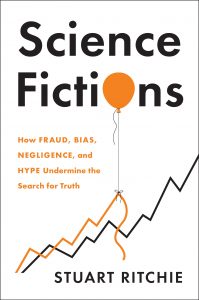

We’re pleased to present an excerpt from Stuart Ritchie’s new book, Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth.

One of the best-known, and most absurd, scientific fraud cases of the twentieth century also concerned transplants: in this case, skin grafts. In 1974, while working at the prestigious Sloan-Kettering Cancer Institute in New York City, the dermatologist William Summerlin presaged Paolo Macchiarini (the Italian surgeon who in 2008 published a fraudulent blockbuster paper in the top medical journal the Lancet on his supposedly successful transplant of a trachea). Summerlin claimed to have solved the same transplant-rejection problem that Macchiarini would later confront. Using a disarmingly straightforward new technique, in which the donor skin was incubated and marinated in special nutrients before the operation, Summerlin had apparently grafted a section of skin from a black mouse onto a white one, with no immune rejection. Except he hadn’t. On the way to show the head of his lab his exciting new findings, he’d coloured in a patch of the white mouse’s fur with a black felt-tip pen. The deception was later revealed by a lab technician who, smelling a rat (or perhaps, in this case, a mouse), used alcohol to rub off the ink. There never were any successful grafts on the mice, and Summerlin was quickly fired.
