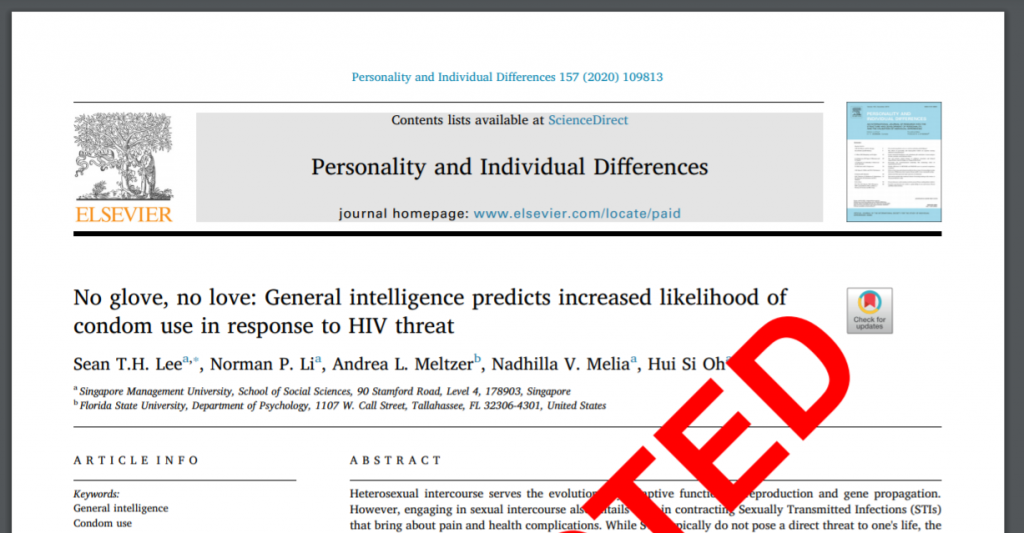

A journal has retracted a controversial 2010 article on intelligence and infections that was based on data gathered decades ago by a now-deceased researcher who lost his emeritus status in 2018 after students said his work was racist and sexist.

The article, “Parasite prevalence and the worldwide distribution of cognitive ability,” was published in Proceedings of the Royal Society B by a group at the University of New Mexico. The group’s claim, according to the abstract:

> The worldwide distribution of cognitive ability is determined in part by variation in the intensity of infectious diseases. From an energetics standpoint, a developing human will have difficulty building a brain and fighting off infectious diseases at the same time, as both are very metabolically costly tasks.

Overlaying average national IQ with parasitic stress, they found “robust worldwide” correlations in five of six regions of the globe:

Continue reading Do some IQ data need a ‘public health warning?’ A paper based on a controversial psychologist’s data is retracted