Clarivate has removed the mega-journal Cureus from its Master Journal List, according to the October update, released today.

The move means Cureus will no longer be indexed in Web of Science or receive an impact factor. As we have reported, delisting can also make researchers less likely to submit to a journal, given that universities rely on such metrics to judge researchers’ work for tenure and promotion decisions.

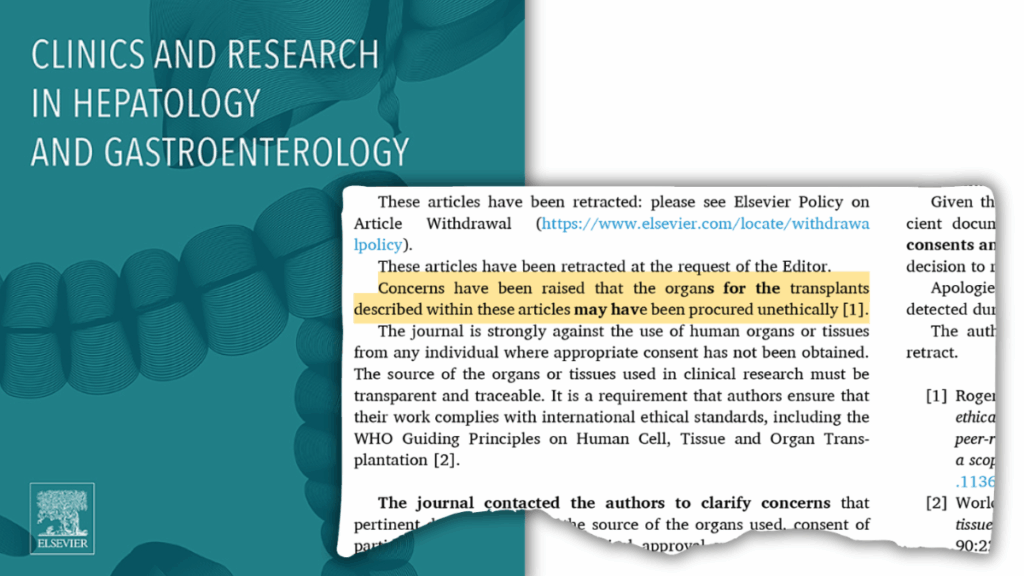

Clarivate put indexing for the journal on hold last September over concerns about article quality, an issue for which the journal has been criticized in the past.
