The reviewer, a neuroscientist in Germany, was confused. The manuscript on her screen, describing efforts to model a thin layer of gray matter in the brain called the indusium griseum, seemed oddly devoid of substance. The figures in the single-authored article made little sense, the MATLAB functions provided were irrelevant, and the discussion failed to engage with the results, reading more like a review of the literature.

And, the reviewer wondered, was the resolution of the publicly available MRI data the manuscript purported to analyze sufficient to visualize the delicate anatomical structure in the first place? She turned to a colleague who sat in the same office. An expert in analyzing brain images, he confirmed her suspicion: The resolution was too low. (Both researchers spoke to us on condition of anonymity.)

The reviewer suggested rejecting the manuscript, which had been submitted to Springer Nature’s Brain Topography. But in November, just a few weeks later, the colleague she had consulted received an invitation to review the same paper, this time for Scientific Reports. He accepted out of curiosity. A figure supposed to depict the indusium griseum but showing a simple sine wave baffled him. “You look at that and think, well, this is not looking like an anatomical structure,” he told us.

Like his colleague who had looked at the manuscript before him, he also felt the text read like technobabble produced by a large language model – full of scientific-sounding sentences without much real meaning.

The two reviewers compared notes. Based on the first review, the author had swapped the irrelevant MATLAB functions in the text for others. But the results and images remained the same. “Nice try, my friend,” the second reviewer recalled thinking. “But forget it.”

At the end of November, however, he again was asked to review a manuscript by the same author, associate professor Eren Öğüt of Istanbul Medeniyet University, a public institution. The new paper, submitted to Neuroinformatics, dealt with a different brain structure, but the abstract geometric shapes it presented were beginning to look familiar.

With growing suspicion, the reviewer looked Öğüt up online. In 2025 alone, the Turkish academic had published 25 papers – nearly all of them in Springer Nature journals – and 12 of those were single-authored. Perhaps even more remarkably, Öğüt also managed to review nearly 650 papers that year, according to Clarivate’s Web of Science. Among the more than 1,400 total reviews he has done, 379 were for Elsevier, 225 for Wolters Kluwer Health and 139 for Springer Nature.

Öğüt has also served as an editor for several journals from major publishers and is currently listed as an associate editor of Springer Nature’s European Journal of Medical Research. He teaches classes on anatomy and neuroanatomy and claims to be a member of Sigma Xi, a scientific honor society based in the United States.

To the two reviewers in Germany, Öğüt’s level of productivity did not seem humanly possible. Rather, they told us, it appears to be accomplished through reckless use of generative AI. One indication they could be right: Öğüt’s reviews average 364 words each, which is just a single word more than the average review length calculated from 11 million reviews.

Extensive review activity can help burnish a resume, the reviewers told us, and journal editors might also be more friendly toward manuscripts coming from a diligent reviewer.

‘Rigorous’ peer review

Öğüt defended his publications, telling us some had been under way for several years, and said his review activity reflected a team effort.

“The appearance of both newly completed and previously developed studies being published within the same year was coincidental rather than indicative. Importantly, all manuscripts were submitted through standard journal procedures and underwent rigorous editorial handling and peer-review processes,” he told us by email.

“We use AI tools for editing or improving sentence clarity, just as many other researchers do,” he added. “In fact, in some manuscripts, in line with editorial and reviewer recommendations, we explicitly state that AI was used for editing purposes.”

Öğüt said he serves “as a reviewer and editor for many journals” and makes “every effort to meet deadlines as promptly as possible. Moreover, I work with a dedicated team who support and assist me throughout these processes.”

He also worried about the discussion of his unpublished work “outside the formal peer-review process,” which he said could “constitute an ethical violation.”

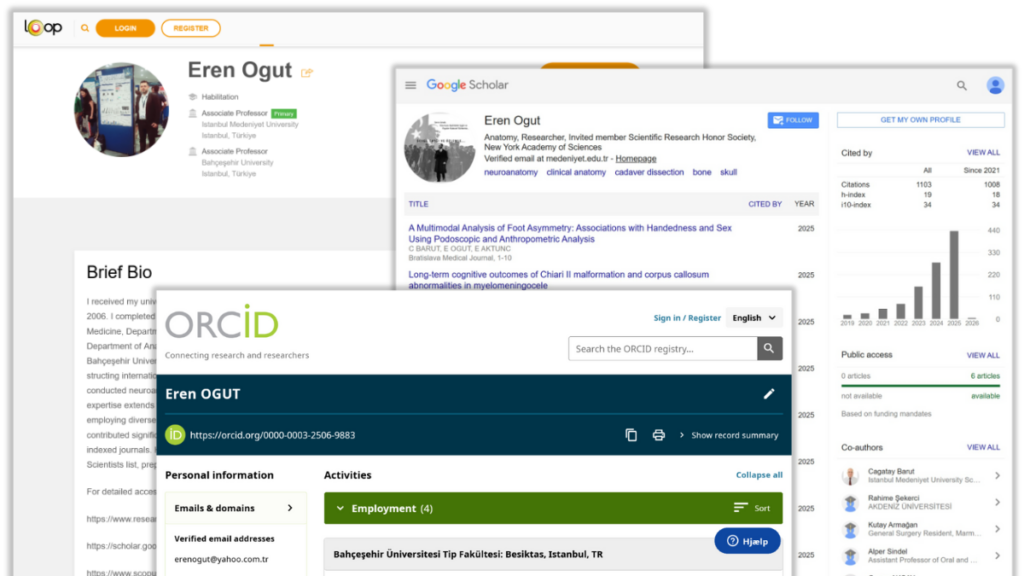

But after we reached out to him, his profiles at Google Scholar, ORCID and Frontiers’ Loop all vanished.

In an email from December that Retraction Watch has seen, the reviewers laid out their concerns to John Van Horn, editor of Neuroinformatics, a Springer Nature title. Three of Öğüt’s single-authored research papers from 2025 were published in that journal, they noted, and they all seemed to follow the same template.

“The pattern of his single-authored articles and the manuscript that I reviewed was quite similar, with a similar style of title formation, redundant and/or noninformative figures, description of MATLAB functions that are not relevant for the research question at hand, and the discussion that resembles a literature review without engaging with the results of the manuscript,” the email stated. “Last but not least, the author never [shows the structures he modeled overlaid on] real MRI images, does not share the data or code, and even states that ‘No datasets were generated or analysed during the current study.’”

Under investigation

In a statement, Van Horn told us the journal had also developed “concurrent” concerns about Öğüt’s work “late last year,” and that “the manuscript submissions from this author to Neuroinformatics, past and current, have been referred to the Springer Nature Research Integrity Group for their detailed examination.”

Tim Kersjes, head of Research Integrity, Resolutions at Springer Nature, told us he could not share any details while the investigation is ongoing, “but we want to assure you that we take this matter extremely seriously.”

“We are grateful to the research community for bringing these concerns to our attention,” he said in a statement.

One of the papers in Neuroinformatics the reviewers’ email mentioned is titled “Integrated 3D Modeling and Functional Simulation of the Human Amygdala: A Novel Anatomical and Computational Analyses.” It purports to use a method called elastic shape analysis developed in 2017 by the mathematician Anuj Srivastava and his colleagues, who used the technique to model the amygdala and other brain structures in a 2022 article. Öğüt’s paper cites this work and, strangely, arrives at a specific quantitative result – 38% – that it also attributes to Srivastava’s 2022 paper. Yet the number, purportedly representing the “lateral bulging” of the amygdala in people with post-traumatic stress disorder, does not appear in Srivastava’s article.

“The value of 38% was not intended to indicate an exact numerical identity with Wu et al. (2022), but rather to reflect a representative magnitude of variance typically explained by PC1 in SRNF-based elastic shape analyses,” Öğüt told us. “We acknowledge that explicitly noting this as an approximation would have avoided potential confusion.”

Srivastava, who is an incoming professor at Johns Hopkins University in Baltimore, told us that at least on first reading, Öğüt’s paper seemed “sub standard.”

“The methodological details are missing, the information is superficial, the equations are mis-typed, the procedure is not reproducible, and so on. The paper repeatedly mentions our method (‘Elastic Shape Analysis’) but it does not give evidence on how they used our method,” Srivastava said.

Jennifer S. Stevens, an associate professor of psychiatry and behavioral sciences at Emory University in Atlanta and a coauthor of Srivastava’s 2022 paper, also found Öğüt’s work problematic. “Since the methods and results are very dense and confusing,” she said, “I can’t develop a formal response at this moment, but it does seem to be concerningly vague.”

To Srivastava, Öğüt’s publication raises a larger question: “I am wondering how this paper got accepted in the first place,” he said.

Since Öğüt is an associate editor of European Journal of Medical Research, please admire the quality of articles published in this journal: https://pubpeer.com/publications/4D10AB3D8478B70ADEF8539F5CB17F