That was the emotional sequence for some significant number of researchers around the world on Friday. In the space of several hours, they received word that they were among the scientific 1% — the most cited researchers on the planet — then learned that…well, they actually weren’t.

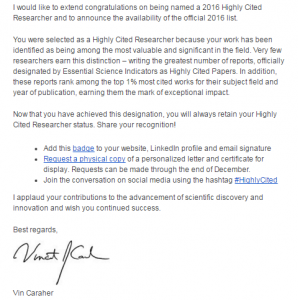

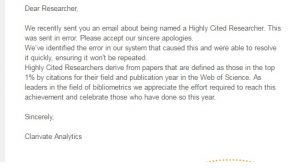

Here’s the letter — subject line, “Congratulations Highly Cited Researcher!” — and the retraction…erm, apology (click to enlarge):

The email even included a link to request a “Highly Cited Researchers 2016 Certificate.”

Clarivate Analytics, which recently acquired Thomson Reuters' scientific division, would not provide Retraction Watch with a specific number, but said:

There were a number of people who received the letter in error. However, the number we should be focused on are the 3,265 Highly Cited Researchers (HCRs) for 2016 who are to be celebrated. Highly Cited Researchers derive from papers that are defined as those in the top 1% by citations for their field and publication year in the Web of Science. As leaders in the field of bibliometrics we appreciate the effort required to reach this achievement and celebrate those who have done so this year.

A number of the “lucky,” however, took to Twitter, using the #HighlyCited hashtag in a way that Clarivate hadn’t intended:

The thrill of victory and the agony of defeat all in one day! #HighlyCited @thomsonreuters

I guess I need to return my badge. pic.twitter.com/8RtaxPDJ5T (Andrew McAdam, @McAdam_lab, November 18, 2016)

First I received news that I was a #HighlyCited researcher.. then Thomson Reuters took it back 😂 (Sachia, @sachiaBIO, November 18, 2016)

Now, we all make mistakes, and Clarivate seems to have owned up to this one quite quickly. In their words:

The error occurred internally with our email system. It was corrected quickly and we emailed apologies to those who received the incorrect email.

They add:

We take HCRs very seriously and since correcting this error, we are confident it won’t be repeated.

But this is the second year in a row that the “highly cited” list has had a rocky reception: One of 2015’s highly cited researchers was Bharat Aggarwal, who now has 18 retractions to his name.


Here's an interesting study question: are authors with retractions over-represented on the Highly Cited Researchers list?

My lab head has one of the 100 most highly cited papers ever. This has made it very clear to us how you get so many citations. It's a methods paper, and everyone who has ever used that method cites it. This will get you WAY more citations than making a discovery, because after a few years discoveries become common knowledge and needn't be cited, but if you use the Method of F, you always have to cite F.

It is far from his most important or influential paper, though. (I have an immediate pick for which one that would be, and it’s well cited but nowhere close to the top 100.)

Perhaps science would be better served if people actually published more papers about "how to do it well" rather than speculative "complete stories" that may not even withstand scrutiny. The fact that citations favour the former over the latter is probably a feature, not a bug.

I understand your misgivings about the fact that it’s “only a methods paper”. But don’t forget that many Nobels go to people who spent their careers on developing either good methods or the basic science that leads to good measurement methods. Think fMRI here, or Rosalyn Yalow’s invention of the radioimmunoassay. So while we put a premium on discoveries, we should perhaps place an even bigger premium on the robust, valid, and reliable measures and methods that get us there.

Indeed, the supplement series of a journal family (mainly tables) that I used to work on was much more highly cited than its parent journal.

I find it utterly humiliating for them to say, "There were a number of people who received the letter in error. However, the number we should be focused on are the 3,265 Highly Cited Researchers (HCRs) for 2016 who are to be celebrated." Yeah, you focus on the 3,265 HCRs and the other ones (like me) should just shut up!!! I think that people who have been "demoted" from stardom to oblivion should sue, because the way it has been handled is humiliating. I will personally investigate all means of getting Thomson Reuters to pay, and anybody who is interested can reach me at my email: [email protected]

I received the mail, the badge, the congratulations of Vin Caraher, and applause for my "contributions to the advancement of scientific discovery and innovation". However, the hyperbolic wording sounded more than suspicious. So…

My first thought was: nice spam, even the hyperlinks look good enough to click.

My second thought was: this must be an experiment carried out by some psychology students. They just want to know how many researchers will click on the link "Request a physical copy of a personalized letter and certificate for display".

My third thought (after receiving the "status update"): a feeling of relief to know that I'm not (and will never be) an HCR, and a sense of pride in having resisted the temptation to request my certificate… Vanitas vanitatum, omnia vanitas (vanity of vanities, all is vanity).

This was an ugly mistake. There's no better way to make TR pay for it than moving from WoS to other databases, e.g. Scopus.

Note that TR sold their IP business (including Web of Science) to Clarivate Analytics.

http://ipscience.thomsonreuters.com/