In an episode reminiscent of the AI-generated graphic of a rat with a giant penis, another paper with an anatomically incorrect image has been retracted after it attracted attention on social media. The authors admit using ChatGPT to make the diagram.

According to the retraction notice published July 12, the article, by researchers at Guangdong Provincial Hydroelectric Hospital in Guangzhou, China, was retracted after “concerns were raised over the integrity of the data and an inaccurate figure.”

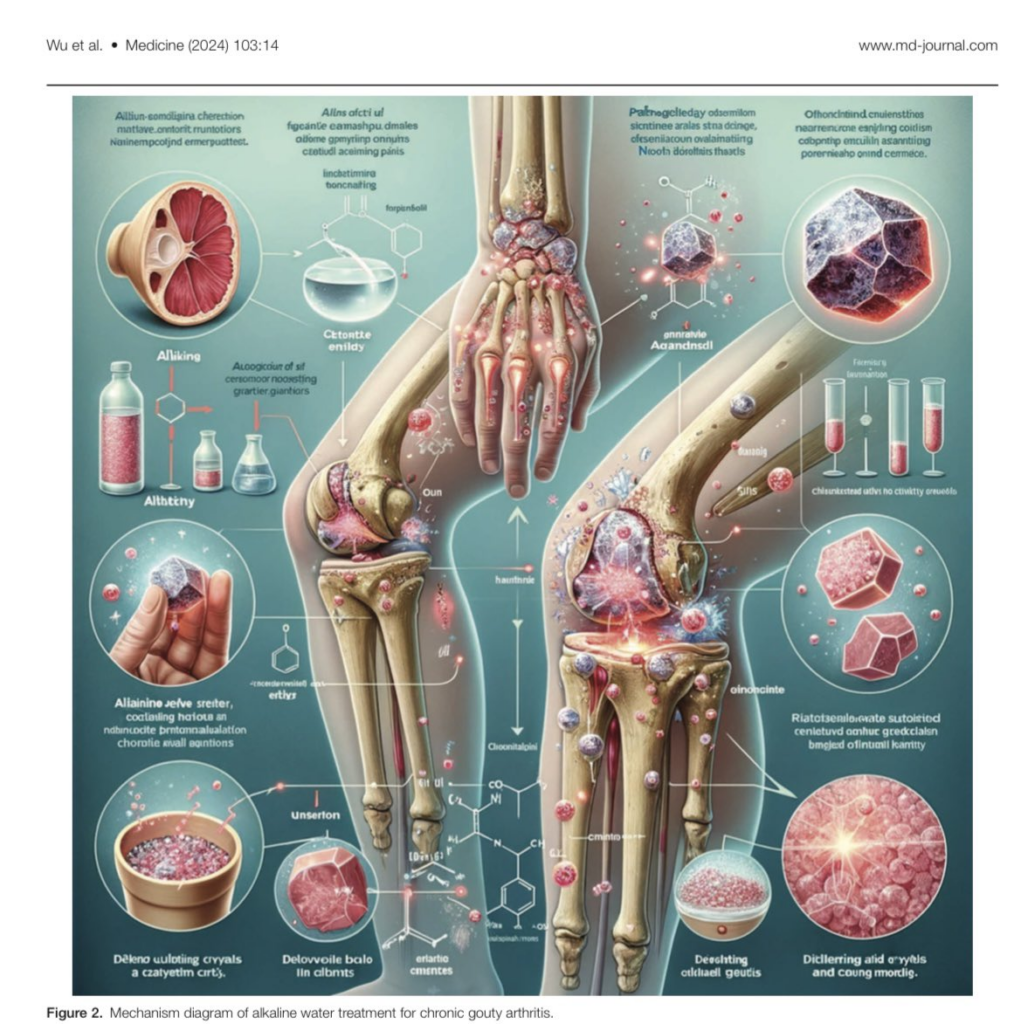

The paper, published in Lippincott’s Medicine, purported to describe a randomized controlled trial that found alkaline water could reduce pain and alleviate symptoms of chronic gouty arthritis.

Morgan Pfiffner, a researcher for Examine.com, an online database for nutrition and supplement research, first noticed the study while on vacation and posted on X about the erroneous diagram in the article.

“I planned to inform the journal when I got back, but that graphic was just too absurd not to share on social media once I saw it,” Pfiffner told Retraction Watch. Another X user found the paper’s introduction to be 100% AI-generated.

Commenters including Elisabeth Bik and Thomas Kesteman chimed in on PubPeer, echoing Pfiffner’s concerns. Bik pointed out that Figure 4 had the wrong number of bones in the lower leg and arm and had nonsensical labels such as “chlsinkestead atlvs no ctivktty greuedis” and “Aliainine jerve sreiter.” She also noted inconsistencies in the data and seemingly unrealistic methods.

Kesteman highlighted additional issues: Some of the references in the article do not appear in PubMed or Google Scholar, and the authors’ email addresses were not institutional – which, we note, is considered a red flag but not always a sign of problems. Further, the results of some statistical analyses were “virtually impossible,” Kesteman said, and data on pain scores in a table “present patterns that are impossible to find in real life.”

Yong Wu, the corresponding author of the study, told Retraction Watch that English is not the team's first language and that translation was prohibitively expensive.

“Therefore, our research team has resorted to using AI for text translation and refinement. Similarly, the expense of scientific illustration is beyond our means, which led us to use ChatGPT for generating research diagrams,” Wu said. “We apologize for any controversy this may have caused.”

A spokesperson for Medicine said the journal is continually improving its editorial review process. “We are working on a number of initiatives to help shape the future of medical research reviews based on collaboration with other leading publishers, dedication to peer reviews and leveraging new technologies,” they said.

“It seems to me that AI graphic itself was just the tip of the iceberg of research misconduct permeating that study,” Pfiffner said. “I’m glad it has been swiftly retracted.”


I’m surprised it passed peer review. Look how funny this image is!

The problem is almost certainly that when manuscripts are submitted to journals, the figures appear at the end of the manuscript (not in place) and/or are submitted as additional files.

So some reviewers just skim the text and never look at the figures.

Bik is a genius. Who else would have known we don’t have calf bones?

Giant rat penis redux. I love it. Thanks, RW, for the information.

My favourite King Crimson bootleg album.

Bik can teach anatomy in a med school.

The use of AI-assisted technologies is increasing in science publishing. RW reports many cases of undeclared use:

https://retractionwatch.com/papers-and-peer-reviews-with-evidence-of-chatgpt-writing/

What about when authors declare that they used AI? Are they good to go? If the researchers at Guangdong Provincial Hydroelectric Hospital had declared in their article that they produced the figures with AI, would there have been no ethical problem?

Currently, even literature reviews are being done with AI-assisted tools and declared by the authors, e.g. (cases that were discussed on PubPeer):

https://pubpeer.com/publications/0C11AC2CA47828B2AA62E5498D5116

Of course, the results turn out strange when AI is used. But are there still ethical concerns if the authors declare the AI usage?

Dr. Bik's first name is spelled: Elisabeth.

https://x.com/RetractionWatch/status/1815834927277170978

A glance at some of the 36 PubPeer comments for "Yong Wu" (https://pubpeer.com/search?q=authors%3A%22Yong+Wu%22) appears to indicate more than just an issue with English.

I recently saw a presentation by a job candidate (a PhD with many years of experience) who used figures that were clearly AI-generated. They weren't as funny as this example or the giant rat penis, but they were certainly fictional and unreal. That in itself is troubling enough. Worse, my colleagues involved in the interview didn't care when I pointed it out.